Evidence-based education is dead, long live evidence-informed education: Thoughts on Dylan Wiliam

The following blog post was first featured on the TES website in April 2015. Unfortunately, due to a technical problem, it didn't survive an update. I've tracked it down and captured it using a site that archives web page snapshots, in response to several requests from people who wanted to link to it in their own writing. I present it here, exactly as it was, so please bear in mind the context of the time in which it was written.

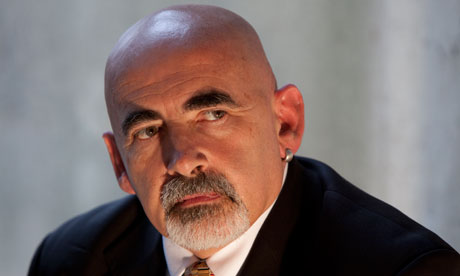

Tom

Dylan Wiliam, high priest of black boxes and emeritus professor at the Institute of Education, says in this week’s TES that teaching cannot be, and will never be, a research-based or research-led profession. And he’s absolutely right.

Given that he’s one of the handful of educational researchers that many teachers could name, and I happen to run researchED, education’s grooviest new hipster/nerd movement, this may appear to be the greatest act of turkeys voting for Christmas imaginable. Let me scrape the cranberry off my wings and I’ll describe why Wiliam is spot on, in a way that needs to be repeated often and loudly.

Education won’t be cured by research alone, nor should we expect it to be. BUT HANG ON, TOM, I see you type with the caps lock on, ISN’T researchED ALL ABOUT THAT? No, and it never was. Funnily enough, most people forget that my experience of research was initially almost entirely negative; I’d swallowed so much snake oil in VAK and similar that I retched my rage into a book, Teacher Proof, in which I flicked Vs at most of what I’d been told solemnly was gospel.

It was only through my work with researchED, and reaching into the research ecosystem, that I got a fairer picture of what was really going on: good, bad and ugly research papers flying around in a tornado, like a library with no index. Quality was no guarantee of status. I saw a huge opportunity for teachers, researchers, and every other inhabitant of the research ecosystem to talk and listen to each other. ResearchED was born, and it’s what I’ve tried to do since: raise research literacy in the teaching profession, promote conversations between communities, cast out the bad, encourage the good.

And Dylan Wiliam has been an important part of that conversation. Here’s my summary of what he said in this week’s TES, and why he was right to say it:

1. Research can’t tell you what could be

Wiliam uses grouping by ability as an example. Research seems to indicate that this "produces gains for high achievers at the expense of losses for low achievers". In other words, it helps one group to the detriment of another. So, for many, this means they should stop setting or streaming. But what this doesn't tell us, Wiliam points out, is why this happens, or ways in which grouping by ability might produce more desirable results. Top sets are often assigned strong teachers, or more senior teachers, which could explain the outcome gap. I remember taking a bottom set through GCSE for two years; normally I was assigned the top sets. At the end of the two years I had (on paper, that most chameleon of awards) value-added scores through the roof. That’s because it’s a lot easier to get a kid from a U to an F than it is to turn an A into an A*. So setting has hidden issues that are only apparent at a classroom and school level. Maybe grouping by ability can work, maybe it can’t. It depends on context.

One significant issue is that studies of human behaviour, such as those summarised in the Education Endowment Foundation Teaching and Learning Toolkit, require an enormous amount of contextual nuance in order to appreciate what they are really telling us. Large randomised controlled trials and huge metastudies are extremely useful ways of finding things out, but they have equally large limitations. They can, if taken at headline value, conceal huge amounts of detail, buried on pages 2, 3 and beyond. According to the Toolkit, Teaching Assistants are an expensive intervention to make for children, with low or limited impact. Because of that, many schools have simply dropped TAs from their staff cohort, as if the matter were settled. But we all know TAs who have transformed the learning of children, even if, taken as a cohort, their impact is averaged out to a low value.

These studies can tell us the big picture of what is happening, but they often fail to suggest why, or in what conditions and contexts valuable results are obtained. In short, they can’t tell us if this precise TA will have an impact on that class, or child. As Wiliam mentions, this is because such studies frequently fail to take into account the relationship between pupil and educator. Interventions aren’t aspirins; they occur between people, and forgetting that the relationship between people is fundamental to the outcomes of the intervention is as shortsighted as assuming that two people will fall in love because they're available and fertile.

2. Research is rarely clear enough by itself to guide action

Wiliam talks about feedback (maybe you’ve heard of Assessment for Learning? He might have had a hand in that…). Feedback, broadly speaking, has a positive correlation with improved learning outcomes. But any kind of feedback? And how does that feedback land with the student? Telling a child who hates you they’ve done well with their use of metaphor might well discourage them from using it again. Telling a child who hates public praise that they’re the cream in your assessment coffee could lead to unexpected results. How you give feedback, and the relationship between the feeder and the fed, is crucial. But most studies of feedback simply look at feedback as a collective entity, a solid and coherent intervention.

The headline of this piece is correct, but needs unpacking: it’s deluded to expect research to form the basis of teaching; it isn’t deluded to use it to improve the practice of education. For a start, Wiliam would otherwise be talking himself out of a job. He even closes the third act of his piece with, "So how do we build expertise in teachers? Research on expertise in general indicates that it is the result of at least ten years of deliberate practice…"

Teachers should be rightly suspicious when they’re told ‘research proves’. In order to do that, it’s necessary for a significant portion of the teaching community to be reasonably research literate — enough to generate a form of herd immunity — both in content and methodology. Then, they can reach out to and engage with research which can assist their decision making. I say ‘assist’ carefully.

That doesn’t mean make their decisions for them; that doesn’t mean it’s a trump card. Teachers need to interact with what the best evidence is saying and translate it through the lens of their experience. If it concurs, then that itself is significant. If it clashes, then that’s an interesting launch platform for a conversation. Teaching is not, and can never be, a research-based or research-led profession. Research can’t tell us what the right questions to ask are, nor can it authoritatively speak for all circumstances and contexts. That’s what human judgment, nous and professional, collective wisdom are for. But it can act as a commentary to what we do. It can expose flaws in our own biases. It can reveal possible prejudices and dogma in our thinking and methods. It can assist in bringing together the shared wisdom of the teaching community. It can and should be nothing less than the attempt to systematically approach what we know about education, and understand it in a structured way.

Teaching can — and needs to be — research informed, possibly research augmented. The craft, the art of it, is at the heart of it. Working out what works also means working out what we mean by ‘works’, and where science, heart and wisdom overlap and where they don’t.

Evidence-based education is dead. Let us never speak of it again.

Not sure I follow here. Good quality research with clear methodology which has been thoroughly read and understood is easily used. The issue is that little research meets these criteria.

I find the TA example interesting. To my understanding the research is limited but of high quality. The main piece is the DISS report by P. Blatchford; have you read this? It is not an RCT but a longitudinal observational study which argues persuasively that TA interactions with students differ from teacher interactions. Often this leads to alternative rather than additional support. This should have led to a serious rethink on how we use support. As I understand it, most of Blatchford's follow-up work is on alternatives such as Response to Intervention and ideas on how to utilise, train and direct TAs. The conclusion to reduce support to save costs can be justified in this context (especially if we consider alternative reductions), even if the idea of teaching my classes without them is personally worrying (31 16-19 students, all but two with EHCPs).

Not sure that was a great example, except of how we are all vulnerable to placing personal experience over evidence.